The data centre industry has spent years fine-tuning how it thinks about power and cooling. It has become normal to discuss them in terms of efficiency, power usage effectiveness (PUE) and how to make facilities cleaner, faster and more scalable. But one part of the infrastructure conversation still doesn’t get the attention it deserves: the network switch.

This might sound like a niche concern, but in today’s IT infrastructure environment, where workloads are growing more complex and unpredictable, switching is no longer a background function. It’s becoming a make-or-break factor in how well a data centre performs and how efficiently it can scale.

Why switches matter more than is acknowledged

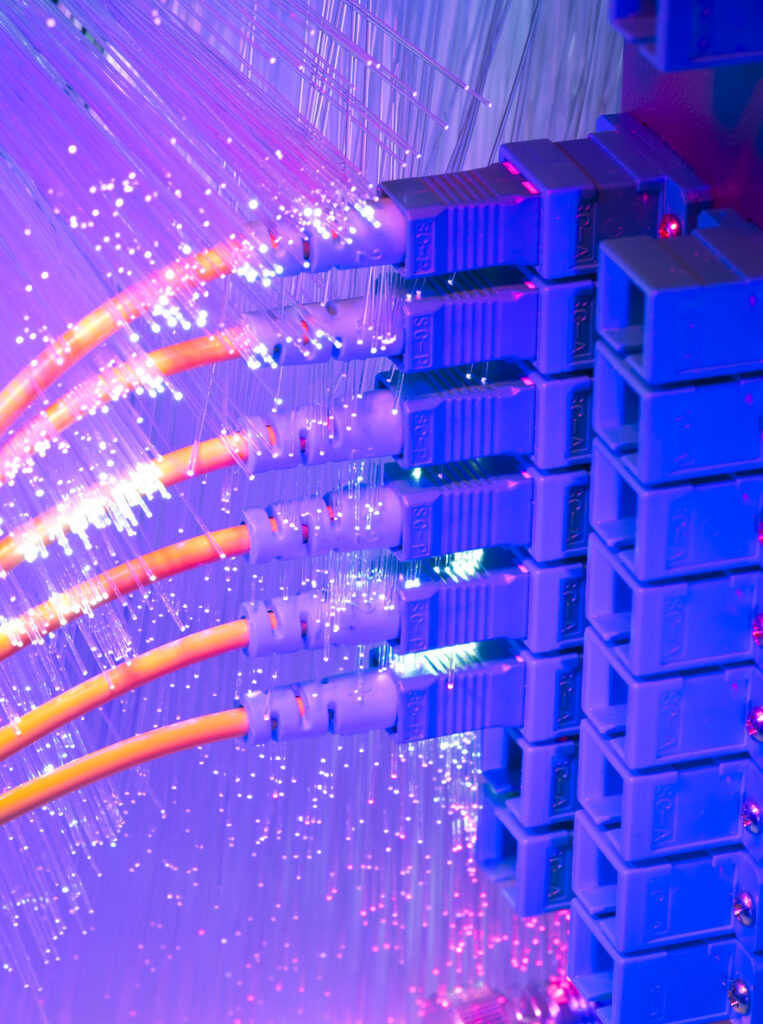

According to Exploding Topics, approximately 402.74 million terabytes of data are created each day (roughly 147 zettabytes a year), and 181 zettabytes are expected to be generated in 2025. Much of this data moves through data centres, between storage arrays, compute nodes, graphics processing unit (GPU) clusters and virtual machines, and every bit of it needs to go through a switch to get from one place to another. In a typical setup, data arrives at the switch as light over optical fibre. The switch converts it to an electrical signal, uses that signal to make a routing decision, and then converts it back to light for onward transmission.

Each conversion might not sound like much, but it happens millions of times per second, across thousands of connections. All that switching uses energy, and all that energy ends up as heat.
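A back-of-the-envelope model makes the link between traffic and heat concrete: average power is throughput multiplied by the energy spent per bit, and nearly all of that power is dissipated as heat. The sketch below assumes a hypothetical switch carrying 51.2 Tb/s at full load and an illustrative 20 pJ per bit; both numbers, the fleet size and the `switching_power_watts` helper are assumptions for illustration, not figures for any real product.

```python
def switching_power_watts(throughput_bps: float, energy_per_bit_pj: float) -> float:
    """Average power (W) = bits moved per second x energy per bit (J).

    energy_per_bit_pj is given in picojoules, so it is scaled by 1e-12.
    """
    return throughput_bps * energy_per_bit_pj * 1e-12


# Hypothetical inputs: one switch at 51.2 Tb/s and an assumed 20 pJ/bit.
THROUGHPUT_BPS = 51.2e12
ENERGY_PER_BIT_PJ = 20.0
SWITCH_COUNT = 100  # hypothetical number of such switches in a hall

per_switch_w = switching_power_watts(THROUGHPUT_BPS, ENERGY_PER_BIT_PJ)
fleet_kw = per_switch_w * SWITCH_COUNT / 1000

# Almost all of this electrical power ends up as heat the cooling system must remove.
print(f"{per_switch_w:.0f} W per switch, {fleet_kw:.1f} kW across {SWITCH_COUNT} switches")
```

Because power scales linearly with both terms, halving the energy per bit halves the heat produced at the same throughput.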

If the facility is running dense AI workloads, supporting financial services or delivering real-time analytics, the volume and speed of data movement explode. That puts pressure not only on the compute and storage layers but also on the network. If the switches can’t keep up without drawing large amounts of power and generating excess heat, everything downstream, especially cooling, becomes more expensive and harder to manage.

The hidden energy cost of switching

What’s surprising is how significant switching can be in overall energy use. In many high-performance environments, traditional switches now account for a meaningful share of a site’s total energy budget. According to NVIDIA, switching typically makes up 8% of energy consumption in data centres handling dynamic AI workloads. This used not to be a concern, but as rack densities climb and operators try to push more performance per square foot, every inefficiency at the network layer starts to add up.

Switches also create a second problem: the heat they generate doesn’t just vanish. It has to be removed, which makes the cooling system work harder. That in turn draws more power, creating a cycle that chips away at efficiency goals.
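The cooling cycle can be put into rough numbers using PUE, the ratio of total facility power to IT power. This sketch assumes a hypothetical 10 MW IT load, applies the 8% switching share cited from NVIDIA to that IT load (an assumption, since the basis of the figure isn’t stated), and assumes a PUE of 1.4 with overhead scaling in proportion to IT load. It is a simplification, not a facility model.

```python
def facility_kw(it_kw: float, pue: float) -> float:
    """Facility power attributable to an IT load, assuming overhead scales with IT power."""
    return it_kw * pue


IT_LOAD_KW = 10_000   # hypothetical 10 MW IT load
SWITCH_SHARE = 0.08   # 8% switching share, assumed here to apply to the IT load
PUE = 1.4             # assumed power usage effectiveness

switch_it_kw = IT_LOAD_KW * SWITCH_SHARE
switch_facility_kw = facility_kw(switch_it_kw, PUE)
overhead_kw = switch_facility_kw - switch_it_kw  # cooling and power-distribution overhead

# Halving switch power saves the IT-side power plus the overhead that came with it.
saving_kw = facility_kw(switch_it_kw / 2, PUE)

print(f"switches: {switch_it_kw:.0f} kW IT, {switch_facility_kw:.0f} kW facility-wide "
      f"({overhead_kw:.0f} kW overhead)")
print(f"halving switch power saves about {saving_kw:.0f} kW")
```

Under these assumptions, 800 kW of switching carries roughly 320 kW of overhead, so halving switch power saves about 560 kW facility-wide rather than 400 kW.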

A different way to move data

This is where optical switching can make a difference. Rather than converting data back and forth between light and electricity, optical switches keep it in the light domain for the whole journey: no optical-electrical conversions, less heat and dramatically lower energy consumption.
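One way to see why keeping data in the light domain helps is to count conversions along a path. In this minimal sketch, each electronic switch hop is assumed to cost two conversions (light to electricity and back), an all-optical hop none, and every conversion an illustrative 5 pJ per bit. The `Hop` class and the per-conversion figure are hypothetical, and the model ignores the switching logic itself, the servers’ own transceivers and any control power an optical switch may need.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One switch on a path; optical=True means the signal stays as light."""
    optical: bool


def conversions_on_path(hops: list[Hop]) -> int:
    """Each electronic hop converts light -> electricity -> light (2 conversions)."""
    return sum(0 if hop.optical else 2 for hop in hops)


def conversion_energy_pj_per_bit(hops: list[Hop], pj_per_conversion: float) -> float:
    """Energy spent on conversions for every bit that crosses the path."""
    return conversions_on_path(hops) * pj_per_conversion


PJ_PER_CONVERSION = 5.0  # hypothetical figure, for illustration only

# A leaf -> spine -> leaf path built from electronic switches, all-optical switches,
# and a mixed path where only the spine is still electronic.
electronic = [Hop(False), Hop(False), Hop(False)]
all_optical = [Hop(True), Hop(True), Hop(True)]
mixed = [Hop(True), Hop(False), Hop(True)]

for name, path in [("electronic", electronic), ("all-optical", all_optical), ("mixed", mixed)]:
    print(name, conversions_on_path(path), conversion_energy_pj_per_bit(path, PJ_PER_CONVERSION))
```

The conversion cost grows with every electronic hop a bit crosses, so the saving from optical switching compounds across multi-hop paths.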

One company working on this challenge is UK innovator Finchetto, which has developed an all-optical, packet-level switch that can be deployed directly in the rack. Unlike traditional switches, it doesn’t need power to make switching decisions; it routes data using light alone. That means lower power draw, lower latency and less heat for the cooling system to deal with.

The implications go beyond performance. If switches generate less heat, cooling strategies can be designed around higher-density loads. Airflow can be simplified, and racks can be packed closer together. In other words, smarter switching has a knock-on effect on every other part of the infrastructure.

From pain point to performance gain

Switching is by no means suddenly the only thing that matters, but it is part of a larger shift across the industry. As the demands on data centres change, power, cooling and connectivity cannot be considered in isolation: they are all connected.

When a switch becomes more efficient, it reduces the load on the power system. That simplifies backup provisioning and eases demand on the cooling system, which may open up options such as heat reuse. It can also improve the performance of AI clusters and other latency-sensitive applications.

Switching used to be something that was optimised at the margins. Now it’s something that needs to be designed around.

Making new tech deployable in the real world

Of course, no operator wants to rip out and replace the network fabric just because something better has come along. That’s why the best switching innovations are the ones that fit into what’s already there: for example, those that work with standard protocols and can be dropped into existing spine-and-leaf topologies without redrawing the whole network map.

This allows for gradual adoption: operators can deploy first in high-intensity pods or test environments and build out from there. There is no need to choose between innovation and reliability; both can be achieved.
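The appeal of a drop-in upgrade is easiest to see in a toy model of a spine-and-leaf fabric. In this sketch, the “network map” is simply the set of leaf-to-spine links: every leaf connects to every spine, so any two leaves are joined by one leaf-spine-leaf path per spine. The hardware in each position is tracked separately, so upgrading a single pilot pod changes that inventory without touching the map. All names here, including the fabric size and the `hardware` dictionary, are hypothetical.

```python
from itertools import product


def leaf_spine_links(num_leaves: int, num_spines: int) -> frozenset[tuple[str, str]]:
    """Every leaf connects to every spine; this link set stands in for the network map."""
    return frozenset(
        (f"leaf{leaf}", f"spine{spine}")
        for leaf, spine in product(range(num_leaves), range(num_spines))
    )


def paths_between(links: frozenset[tuple[str, str]], a: str, b: str) -> list[tuple[str, str, str]]:
    """All leaf -> spine -> leaf paths between two leaves."""
    spines_a = {spine for leaf, spine in links if leaf == a}
    spines_b = {spine for leaf, spine in links if leaf == b}
    return sorted((a, spine, b) for spine in spines_a & spines_b)


links = leaf_spine_links(num_leaves=4, num_spines=2)

# Hypothetical inventory: which kind of switch sits in each leaf position.
hardware = {f"leaf{i}": "electronic" for i in range(4)}
hardware["leaf3"] = "optical"  # upgrade one pilot pod in place

# The inventory changed, but the map is still built from the same topology,
# so the paths between leaves are exactly as they were.
print(len(links), paths_between(links, "leaf0", "leaf3"))
```

In a real fabric this only holds if the replacement switch is compatible at the port and protocol level, which is the condition described above.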

Switching as part of the sustainability toolkit

Sustainability remains a top priority for the industry. It’s driving procurement decisions, investor expectations, and regulatory frameworks. And while much of the focus is still on renewable energy and PUE, there’s a growing realisation that efficiency starts with smart design.

By cutting energy use at the switching layer, and reducing the amount of waste heat produced, operators can improve their environmental performance without compromising capability. And unlike some sustainability measures, switching improvements don’t require major behavioural change or offsets. They’re architectural, they’re measurable, and importantly, they can be planned.

The next generation of data centres won’t just be bigger; they’ll be more adaptable, more modular and more responsive to workload changes. That kind of infrastructure needs a network fabric that doesn’t lag behind the rest of the stack.

Switching obviously isn’t the only challenge operators face, but it’s one of the few places where a rethink can deliver benefits across the board. If we want to get serious about building data centres that are genuinely future-ready, switching should be a key consideration.